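Training and deploying a DeepSeek-style LLM and building a private knowledge base requires working through the following steps systematically. The concrete implementation plan and resource recommendations are below: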

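- Obtaining a base model:

  - Download an open-source DeepSeek release from Hugging Face (e.g. DeepSeek-7B/67B)

  - Or use an open-source model such as LLaMA or Mistral as the base

- Model analysis:

```python
from transformers import AutoModelForCausalLM

# Load the 7B base checkpoint and report its size in billions of parameters
model = AutoModelForCausalLM.from_pretrained("deepseek-ai/deepseek-llm-7b-base")
print(f"Parameter count: {model.num_parameters() / 1e9:.1f}B")
```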
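
Once the parameter count is known, a rough estimate of the memory needed just to hold the weights follows from the storage format. The sketch below is a back-of-the-envelope calculation, not a measurement: the helper name `weight_memory_gb` and the round figure of 7e9 parameters are assumptions for illustration, and activations, optimizer state, and the KV cache are deliberately left out.

```python
# Bits used to store one parameter in common formats
BITS_PER_PARAM = {"fp32": 32, "fp16": 16, "bf16": 16, "int8": 8, "4bit": 4}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    bits = BITS_PER_PARAM[dtype]
    return num_params * bits / 8 / 1e9


num_params = 7e9  # assumed round figure for a "7B" model
for dtype in ("fp32", "fp16", "int8", "4bit"):
    print(f"{dtype}: {weight_memory_gb(num_params, dtype):.1f} GB")
```

Real usage during training or inference will be higher than these figures, so treat them as a lower bound when choosing hardware.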

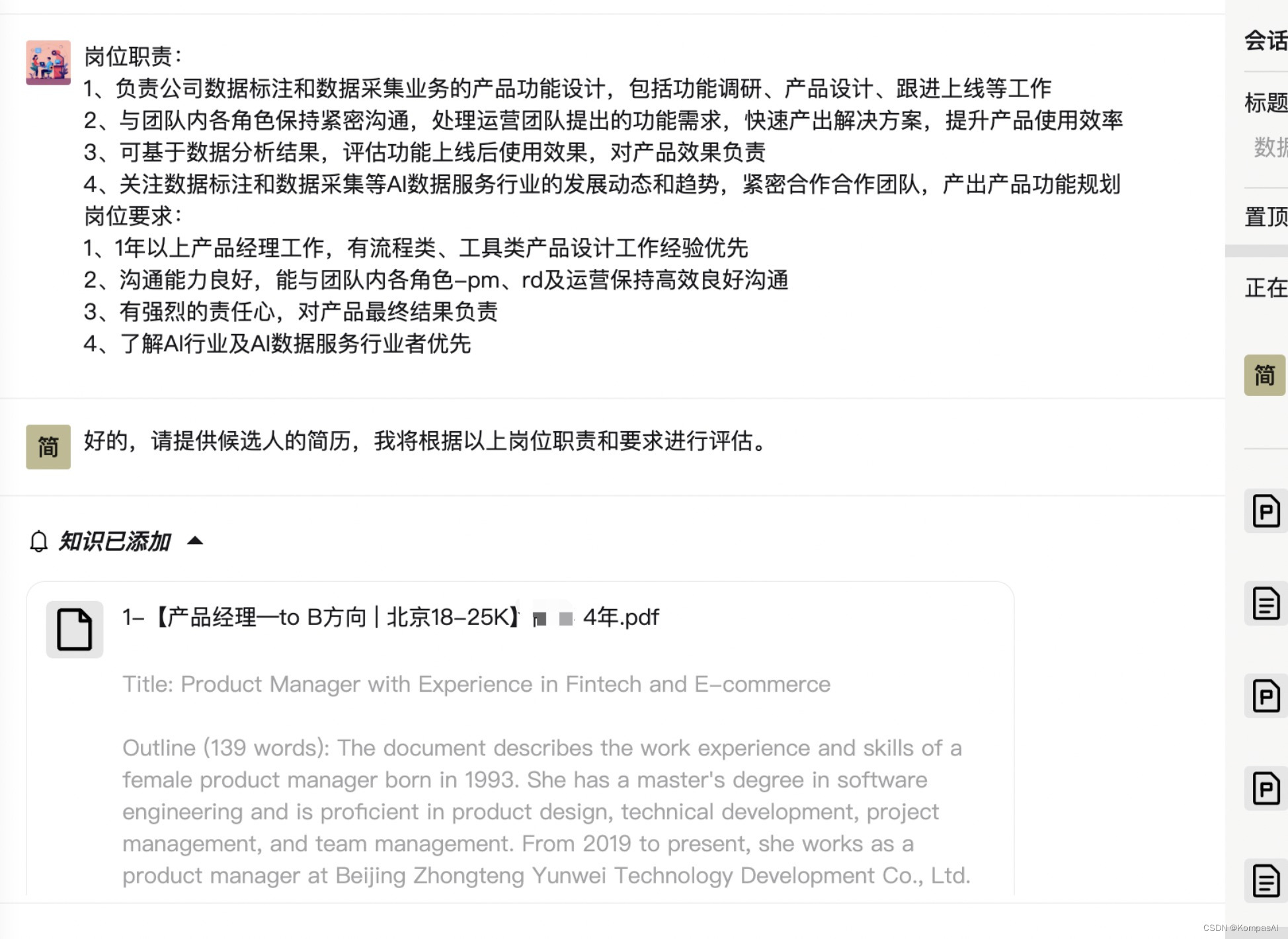

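- Knowledge base construction pipeline:

  - Data collection: gather raw data through crawlers or APIs (10GB+ processed per day)

  - Cleaning and filtering:

```python
# In current LangChain releases this class lives in langchain_community
# (it also needs the html2text package installed)
from langchain_community.document_transformers import Html2TextTransformer

# raw_docs: a list of LangChain Document objects loaded in the collection step
cleaner = Html2TextTransformer()
cleaned_docs = cleaner.transform_documents(raw_docs)
```

  - Knowledge structuring:

    - Build a document index with LlamaIndex

    - Generate a vector database (FAISS/Pinecone)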
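
To make the idea of a vector index concrete, here is a minimal pure-Python sketch of similarity search. It is a toy stand-in, not the LlamaIndex or FAISS API: `ToyVectorStore`, `embed`, and `cosine` are hypothetical names, and word-count vectors replace the learned embeddings a real pipeline would use.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: a bag-of-words count vector (real systems use a neural encoder)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Stores (text, vector) pairs and returns the texts closest to a query."""

    def __init__(self):
        self.items = []

    def add(self, text):
        self.items.append((text, embed(text)))

    def search(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = ToyVectorStore()
store.add("refund policy allows returns within 30 days")
store.add("gpu servers are billed per hour")
store.add("the office opens at nine")
print(store.search("how are gpu servers billed"))
```

A production setup would swap `embed` for an embedding model and the linear scan for an approximate nearest-neighbor index, which is the role FAISS or Pinecone plays in the pipeline.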

- Efficient fine-tuning:

```python
from peft import LoraConfig, get_peft_model

# LoRA adapters on the attention query and value projections
peft_config = LoraConfig(
    r=8,
    lora_alpha=32,
    target_modules=["q_proj", "v_proj"],
    lora_dropout=0.1,
)
model = get_peft_model(model, peft_config)
```

- Mixed-precision training:

```bash
accelerate launch --mixed_precision fp16 train.py \
    --per_device_train_batch_size 4 \
    --gradient_accumulation_steps 8
```
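
Two small calculations help when reading these settings: how many parameters LoRA actually trains, and what batch size each optimizer step sees. The sketch below assumes a hidden size of 4096, 30 transformer layers, and square `q_proj`/`v_proj` matrices purely for illustration; check the model's config for the real values. Both function names are hypothetical.

```python
def lora_trainable_params(r, d_in, d_out, modules_per_layer, num_layers):
    """LoRA adds A (r x d_in) and B (d_out x r) for each adapted weight matrix."""
    per_module = r * (d_in + d_out)
    return per_module * modules_per_layer * num_layers


def effective_batch_size(per_device, accumulation_steps, num_devices=1):
    """Number of samples that contribute to one optimizer step."""
    return per_device * accumulation_steps * num_devices


# Assumed shapes for illustration only (not taken from the source)
params = lora_trainable_params(r=8, d_in=4096, d_out=4096, modules_per_layer=2, num_layers=30)
batch = effective_batch_size(per_device=4, accumulation_steps=8)
print(f"LoRA trainable parameters: {params:,}")
print(f"Effective batch size on one device: {batch}")
```

Under these assumptions the adapters hold a few million parameters, a tiny fraction of a 7B model, which is why LoRA fits on modest hardware.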

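- Production deployment architecture:

  - Client

  - API gateway

  - Authentication and authorization

  - Load balancing

  - Model instance 1

  - Model instance 2

  - Model instance N

  - Vector database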
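
The component list suggests a request flow of client, gateway, authentication, load balancer, then one of several model instances. That ordering is our reading of the list, not something the source states explicitly. Under that assumption, a minimal sketch of the gateway logic could look like the following; `Gateway` and `make_instance` are hypothetical names, and the model servers are replaced by functions that only echo which replica answered.

```python
import itertools


class Gateway:
    """Toy API gateway: checks an API key, then round-robins across model instances."""

    def __init__(self, api_keys, instances):
        self.api_keys = set(api_keys)
        self._next_instance = itertools.cycle(instances)

    def handle(self, api_key, prompt):
        # Authentication step: reject unknown keys before touching any model
        if api_key not in self.api_keys:
            raise PermissionError("invalid API key")
        # Load-balancing step: pick the next instance in round-robin order
        instance = next(self._next_instance)
        return instance(prompt)


def make_instance(name):
    """Stand-in for a real model server; it only tags the prompt with its name."""
    def generate(prompt):
        return f"[{name}] {prompt}"
    return generate


gateway = Gateway(
    api_keys={"demo-key"},
    instances=[make_instance("model-1"), make_instance("model-2")],
)
replies = [gateway.handle("demo-key", "hello") for _ in range(3)]
print(replies)
```

Round-robin is the simplest policy; a real deployment would more likely route on queue depth or GPU utilization, and would query the vector database before calling the model.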

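We recommend starting with small-scale experiments (for example, a 7B model with LoRA fine-tuning) and scaling up only after the results have been validated. Elastic GPU resources from Alibaba Cloud or Tencent Cloud can help keep costs under control.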

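For individual developers with limited resources, the following approach makes it possible to inject private knowledge on consumer-grade hardware (even a laptop). The concrete steps and optimization strategies are below: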

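```python
import torch

# Inspect the first CUDA device: total memory and bfloat16 support
print(f"Total VRAM: {torch.cuda.get_device_properties(0).total_memory / 1e9:.1f}GB")
print(f"BF16 supported: {torch.cuda.is_bf16_supported()}")
```

Minimum configuration:

- GPU: RTX 3090 (24GB) / 4090 (24GB)

- CPU: 8 cores or more

- RAM: 32GB+

- Disk: 50GB free space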
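
The CPU and disk items in this list can be checked with the standard library alone. The sketch below is one possible pre-flight check; `unmet_requirements` is a hypothetical helper, and GPU and RAM are not covered because they need extra packages to query.

```python
import os
import shutil

# Thresholds taken from the minimum configuration list
MIN_CPU_CORES = 8
MIN_FREE_DISK_GB = 50


def unmet_requirements(cpu_cores, free_disk_gb):
    """Return one message per requirement that the machine does not meet."""
    problems = []
    if cpu_cores < MIN_CPU_CORES:
        problems.append(f"CPU: {cpu_cores} cores < {MIN_CPU_CORES}")
    if free_disk_gb < MIN_FREE_DISK_GB:
        problems.append(f"Disk: {free_disk_gb:.0f}GB free < {MIN_FREE_DISK_GB}GB")
    return problems


cpu_cores = os.cpu_count() or 0
free_disk_gb = shutil.disk_usage(".").free / 1e9
print(unmet_requirements(cpu_cores, free_disk_gb) or "CPU and disk look sufficient")
```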

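```python
import torch
from transformers import AutoModelForCausalLM, BitsAndBytesConfig

# 4-bit NF4 quantization with bfloat16 compute (requires the bitsandbytes package)
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16,
)
# Model ID as given in the source; confirm it exists on the Hugging Face Hub before use
model = AutoModelForCausalLM.from_pretrained(
    "deepseek-ai/deepseek-llm-1.3b",
    quantization_config=bnb_config,
)
```

Advantages:

- A 7B model needs only 12GB of VRAM

- Training is 40% faster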
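
To show where the memory saving comes from, here is a toy example of 4-bit quantization. It uses plain uniform absmax quantization, which is a simpler scheme swapped in for illustration; it is not the NF4 format that bitsandbytes implements. The function names are hypothetical.

```python
def quantize_4bit(values):
    """Uniform absmax quantization to signed 4-bit integer codes in [-7, 7]."""
    absmax = max(abs(v) for v in values)
    scale = absmax / 7 if absmax > 0 else 1.0
    codes = [max(-7, min(7, round(v / scale))) for v in values]
    return codes, scale


def dequantize_4bit(codes, scale):
    """Map integer codes back to approximate floating-point values."""
    return [c * scale for c in codes]


weights = [0.12, -0.53, 0.98, -0.07, 0.31]
codes, scale = quantize_4bit(weights)
restored = dequantize_4bit(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, f"scale={scale:.4f}", f"max_error={max_error:.4f}")
```

Each weight is stored as a 4-bit code plus one shared scale per block, and the rounding error stays within half a quantization step. NF4 follows the same store-codes-plus-scale idea but spaces its levels to match normally distributed weights.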

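```python
from peft import LoraConfig

# Lighter LoRA settings for consumer hardware
peft_config = LoraConfig(
    r=4,
    lora_alpha=8,
    target_modules=["q_proj", "v_proj"],
    lora_dropout=0.05,
)
```

Standard data format example (JSONL):

```json
{"instruction": "What is my bank account password?", "input": "", "output": "Under the privacy policy, I cannot access your password information"}
{"instruction": "Remind me about the meeting at 3 pm tomorrow", "input": "Current time: 2024-02-20 14:00", "output": "Reminder set: 2024-02-21 15:00 project review meeting"}
```

```bash
python -m pip install unstructured langchain langchain-community
python -c "from langchain_community.document_loaders import DirectoryLoader; loader = DirectoryLoader('./docs', glob='**/*.txt'); docs = loader.load()"
```

```python
import nlpaug.augmenter.word as naw

# Data augmentation: substitute words using contextual embeddings from BERT
aug = naw.ContextualWordEmbsAug(model_path="bert-base-uncased", action="substitute")
augmented_text = aug.augment("My schedule")
```

```python
from peft import prepare_model_for_kbit_training
from transformers import TrainingArguments

# Prepare the quantized model for training, then define a short training run
model = prepare_model_for_kbit_training(model)
training_args = TrainingArguments(
    output_dir="./outputs",  # hypothetical path; the source does not specify one
    per_device_train_batch_size=2,
    gradient_accumulation_steps=4,
    max_steps=50,
    learning_rate=1e-4,
    fp16=True,
)
```

```python
import json

# Load chat history; each line of the file is expected to hold one JSON object
with open("wechat_chats.txt", encoding="utf-8") as f:
    dialogues = [json.loads(line) for line in f if line.strip()]
```

```python
import torch.nn as nn
import torch.nn.functional as F


class StyleLoss(nn.Module):
    def forward(self, outputs, labels):
        # Compare the last 10 positions (float tensors of matching shape);
        # use 1 - cosine similarity so a closer match gives a lower loss
        similarity = F.cosine_similarity(outputs[:, -10:], labels[:, -10:], dim=-1)
        return 1 - similarity.mean()
```

```python
# Sensitive terms to mask before training; extend the list for your own data
sensitive_words = ["password", "ID number", "bank card"]


def safety_filter(text):
    for word in sensitive_words:
        text = text.replace(word, "[REDACTED]")
    return text
```

```python
# 8-bit AdamW from bitsandbytes reduces optimizer memory
training_args = TrainingArguments(
    optim="adamw_bnb_8bit",
    ...
)
```

```python
from accelerate import FullyShardedDataParallelPlugin

# Offload parameters to CPU memory and shrink the per-step batch to save VRAM
plugin = FullyShardedDataParallelPlugin(cpu_offload=True)
training_args = TrainingArguments(
    per_device_train_batch_size=1,
    gradient_accumulation_steps=8,
    ...
)
```

With the approach above, customized fine-tuning on personal data can be completed in 1-2 hours even on a single consumer-grade GPU. We recommend starting with a smaller model such as DeepSeek-1.3B, combined with QLoRA and 4-bit quantization, to train efficiently while keeping the data private.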
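
The JSONL instruction records shown in this section still have to be turned into plain training text before tokenization. The source does not say which prompt template it uses, so the sketch below assumes an Alpaca-style layout; the template strings and the `format_record` helper are illustrative assumptions, not the author's format.

```python
import json

# Assumed Alpaca-style templates; the source does not specify a prompt format
PROMPT_WITH_INPUT = (
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n{output}"
)
PROMPT_NO_INPUT = "### Instruction:\n{instruction}\n\n### Response:\n{output}"


def format_record(line):
    """Parse one JSONL line and render it as a single training string."""
    record = json.loads(line)
    # Use the shorter template when the optional "input" field is empty or missing
    template = PROMPT_WITH_INPUT if record.get("input") else PROMPT_NO_INPUT
    return template.format(
        instruction=record["instruction"],
        input=record.get("input", ""),
        output=record["output"],
    )


sample = (
    '{"instruction": "Remind me about the meeting", '
    '"input": "Current time: 2024-02-20 14:00", "output": "Reminder set"}'
)
print(format_record(sample))
```

Whatever template is chosen, the same one should be used at inference time so the model sees prompts in the shape it was trained on.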

Original link: https://blog.csdn.net/ppzzgg666/article/details/145413593?ops_request_misc=%257B%2522request%255Fid%2522%253A%252231139942e9f95b5e540db1cb7d8277e2%2522%252C%2522scm%2522%253A%252220140713.130102334.pc%255Fblog.%2522%257D&request_id=31139942e9f95b5e540db1cb7d8277e2&biz_id=0&utm_medium=distribute.pc_search_result.none-task-blog-2~blog~first_rank_ecpm_v1~times_rank-14-145413593-null-null.nonecase&utm_term=deepseek%E9%83%A8%E7%BD%B2
