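Goal: after deploying Ollama locally and downloading a pile of models, I had no decent interface to use them with, so I simply wrote a basic one myself.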

1. Download and install Ollama locally from the Ollama website.

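2. Find the deepseek model on the Ollama platform. Any size will do as long as your machine can handle it; try the 1.5b version first, then experiment with the others.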

3. Download the model from a local command prompt (cmd), for example: `ollama pull deepseek-r1:1.5b`
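To confirm the download worked, `ollama list` shows the installed models from the command line. The sketch below asks the same question over Ollama's local REST API (`GET /api/tags` on the default port 11434, the same endpoint `app.py` uses later). It uses only the standard library; the helper names `model_names` and `list_local_models` are illustrative, not part of Ollama.

```python
import json
import urllib.request

# Ollama's default address (the same value app.py uses)
OLLAMA_HOST = "http://localhost:11434"


def model_names(tags_response: dict) -> list:
    """Extract the model names from the JSON returned by GET /api/tags."""
    return [model["name"] for model in tags_response.get("models", [])]


def list_local_models(host: str = OLLAMA_HOST) -> list:
    """Ask a running Ollama server which models have been pulled."""
    with urllib.request.urlopen(f"{host}/api/tags", timeout=5) as resp:
        return model_names(json.load(resp))
```

With Ollama running, `list_local_models()` should include `deepseek-r1:1.5b` after the pull above.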

4. Write the following code in any IDE.

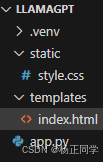

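Directory structure (`render_template` makes Flask look for `index.html` in a `templates/` folder, and the page loads its stylesheet from `/static/style.css`):

- `app.py`
- `templates/index.html`
- `static/style.css`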

index.html:

```html
<!DOCTYPE html>
<html>
<head>
    <meta charset="UTF-8">
    <title>Local Model Chat</title>
    <link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/font-awesome/6.0.0/css/all.min.css">
    <link rel="stylesheet" href="/static/style.css">
</head>
<body>
    <div class="container">
        <div class="sidebar">
            <div class="history-header">
                <h3>Chat topics</h3>
                <div>
                    <button onclick="createNewChat()" title="New topic"><i class="fas fa-plus"></i></button>
                    <button onclick="clearHistory()" title="Clear history"><i class="fas fa-trash"></i></button>
                </div>
            </div>
            <ul id="historyList"></ul>
            <div class="model-select">
                <select id="modelSelect">
                    <option value="">Select a model...</option>
                </select>
            </div>
        </div>
        <div class="chat-container">
            <div id="chatHistory" class="chat-history"></div>
            <div class="input-area">
                <!-- A single-line <input>, so only Enter-to-send is supported -->
                <input type="text" id="messageInput" placeholder="Type a message (Enter to send)" onkeydown="handleKeyPress(event)">
                <button onclick="sendMessage()"><i class="fas fa-paper-plane"></i></button>
            </div>
        </div>
    </div>
    <script>
        // ID of the conversation currently shown
        let currentChatId = null;

        // Escape text before it goes into innerHTML (prevents HTML/script injection)
        function escapeHtml(text) {
            const div = document.createElement('div');
            div.textContent = text;
            return div.innerHTML;
        }

        function getHistory() {
            return JSON.parse(localStorage.getItem('chatHistory') || '[]');
        }

        // On page load: render saved chats and fill the model drop-down
        window.onload = async () => {
            loadHistory();
            const select = document.getElementById('modelSelect');
            try {
                const response = await fetch('/get_models');
                const models = await response.json();
                if (!response.ok) throw new Error(models.error || response.statusText);
                models.forEach(model => {
                    const option = document.createElement('option');
                    option.value = model;
                    option.textContent = model;
                    select.appendChild(option);
                });
            } catch (err) {
                console.error(err);
                select.options[0].textContent = 'Could not load models (is Ollama running?)';
            }
        };

        // Save a chat: update it if it exists, otherwise add it to the top
        function saveToHistory(chatData) {
            const history = getHistory();
            const existingIndex = history.findIndex(item => item.id === chatData.id);
            if (existingIndex > -1) {
                history[existingIndex] = chatData;
            } else {
                history.unshift(chatData);
            }
            localStorage.setItem('chatHistory', JSON.stringify(history));
            loadHistory();
        }

        // Render the sidebar list
        function loadHistory() {
            const list = document.getElementById('historyList');
            list.innerHTML = getHistory().map(chat => `
                <li class="history-item" onclick="loadChat('${chat.id}')">
                    <div class="history-header">
                        <div class="history-model">${escapeHtml(chat.model || 'No model')}</div>
                        <button class="delete-btn" onclick="deleteChat('${chat.id}', event)">
                            <i class="fas fa-times"></i>
                        </button>
                    </div>
                    <div class="history-preview">${escapeHtml(chat.messages.slice(-1)[0]?.content || '(empty)')}</div>
                    <div class="history-time">${new Date(chat.timestamp).toLocaleString()}</div>
                </li>
            `).join('');
        }

        // Append one message bubble (with icon); the text is set via textContent
        function appendMessage(role, text) {
            const div = document.createElement('div');
            div.className = role === 'user' ? 'message user-message' : 'message ai-message';
            div.innerHTML = `<div class="avatar"><i class="fas ${role === 'user' ? 'fa-user' : 'fa-robot'}"></i></div><div class="content"></div>`;
            div.querySelector('.content').textContent = text;
            const chatHistory = document.getElementById('chatHistory');
            chatHistory.appendChild(div);
            chatHistory.scrollTop = chatHistory.scrollHeight;
            return div;
        }

        // Reopen a saved chat (referenced by the sidebar but previously undefined)
        function loadChat(chatId) {
            const chat = getHistory().find(item => item.id === chatId);
            if (!chat) return;
            currentChatId = chat.id;
            document.getElementById('chatHistory').innerHTML = '';
            chat.messages.forEach(m => appendMessage(m.role, m.content));
            if (chat.model) document.getElementById('modelSelect').value = chat.model;
        }

        // Delete all saved chats (referenced by the toolbar but previously undefined)
        function clearHistory() {
            if (!confirm('Clear all chat history?')) return;
            localStorage.removeItem('chatHistory');
            loadHistory();
            createNewChat();
        }

        // Enter sends the message
        function handleKeyPress(e) {
            if (e.key === 'Enter' && !e.shiftKey) {
                e.preventDefault();
                sendMessage();
            }
        }

        async function sendMessage() {
            const input = document.getElementById('messageInput');
            const message = input.value.trim();
            const model = document.getElementById('modelSelect').value;
            if (!message || !model) return;

            if (!currentChatId) currentChatId = Date.now().toString();
            // Earlier turns of this chat (was an undefined `existingMessages`)
            const existing = getHistory().find(item => item.id === currentChatId);
            const existingMessages = existing ? existing.messages : [];

            appendMessage('user', message);
            const aiContent = appendMessage('assistant', '').querySelector('.content');
            input.value = '';

            const chatHistory = document.getElementById('chatHistory');
            let responseText = '';
            try {
                const response = await fetch('/chat', {
                    method: 'POST',
                    headers: { 'Content-Type': 'application/json' },
                    body: JSON.stringify({ model: model, messages: [{ role: 'user', content: message }] })
                });
                const reader = response.body.getReader();
                const decoder = new TextDecoder();
                let buffer = '';  // partial line carried over from the previous chunk
                while (true) {
                    const { done, value } = await reader.read();
                    if (done) break;
                    buffer += decoder.decode(value, { stream: true });
                    const lines = buffer.split('\n');
                    buffer = lines.pop();  // the last piece may be incomplete
                    for (const line of lines) {
                        if (!line.startsWith('data: ')) continue;
                        const data = JSON.parse(line.slice(6));
                        if (data.error) throw new Error(data.error);
                        responseText += data.response || '';
                        aiContent.textContent = responseText;
                        chatHistory.scrollTop = chatHistory.scrollHeight;
                    }
                }
            } catch (err) {
                console.error('Request or parse error:', err);
                aiContent.textContent = responseText + '\n[Error: ' + err.message + ']';
            }

            saveToHistory({
                id: currentChatId,
                model: model,
                timestamp: Date.now(),
                messages: [...existingMessages,
                           { role: 'user', content: message },
                           { role: 'assistant', content: responseText }]
            });
        }

        function createNewChat() {
            currentChatId = Date.now().toString();
            document.getElementById('chatHistory').innerHTML = '';
            document.getElementById('messageInput').value = '';
        }

        function deleteChat(chatId, event) {
            event.stopPropagation();  // don't also trigger loadChat on the <li>
            const newHistory = getHistory().filter(item => item.id !== chatId);
            localStorage.setItem('chatHistory', JSON.stringify(newHistory));
            loadHistory();
            if (chatId === currentChatId) {
                createNewChat();
            }
        }
    </script>
</body>
</html>
```

style.css:

```css
body {
    margin: 0;
    font-family: Arial, sans-serif;
    background: #f0f0f0;
}

.container {
    display: flex;
    height: 100vh;
}

.sidebar {
    width: 250px;
    background: #2c3e50;
    padding: 20px;
    color: white;
}

.chat-container {
    flex: 1;
    display: flex;
    flex-direction: column;
}

.chat-history {
    flex: 1;
    padding: 20px;
    overflow-y: auto;
    background: white;
}

.message {
    display: flex;
    align-items: flex-start;
    gap: 10px;
    padding: 12px;
    margin: 10px 0;
}

.user-message {
    flex-direction: row-reverse;
}

.message .avatar {
    width: 32px;
    height: 32px;
    border-radius: 50%;
    flex-shrink: 0;
}

.user-message .avatar {
    background: #3498db;
    display: flex;
    align-items: center;
    justify-content: center;
}

.ai-message .avatar {
    background: #2ecc71;
    display: flex;
    align-items: center;
    justify-content: center;
}

.message .content {
    max-width: calc(100% - 50px);
    padding: 10px 15px;
    border-radius: 15px;
    white-space: pre-wrap;
    word-break: break-word;
    line-height: 1.5;
}

.input-area {
    display: flex;
    gap: 10px;
    padding: 15px;
    background: white;
    box-shadow: 0 -2px 10px rgba(0,0,0,0.1);
}

input[type="text"] {
    flex: 1;
    min-width: 300px;      /* minimum width */
    padding: 12px 15px;
    border: 1px solid #ddd;
    border-radius: 25px;   /* rounder corners */
    margin-right: 0;
    font-size: 16px;
}

button {
    padding: 10px 20px;
    background: #007bff;
    color: white;
    border: none;
    border-radius: 5px;
    cursor: pointer;
}

button:hover {
    background: #0056b3;
}

/* History list styles */
#historyList {
    list-style: none;      /* no bullets in the sidebar list */
    padding: 0;
}

.history-header {
    display: flex;
    justify-content: space-between;
    align-items: center;
    margin-bottom: 8px;
}

.history-item {
    padding: 12px;
    border-bottom: 1px solid #34495e;
    cursor: pointer;
    transition: background 0.3s;
}

.history-item:hover {
    background: #34495e;
}

.history-preview {
    color: #bdc3c7;
    font-size: 0.9em;
    white-space: nowrap;
    overflow: hidden;
    text-overflow: ellipsis;
}

.history-time {
    color: #7f8c8d;
    font-size: 0.8em;
    margin-top: 5px;
}

/* Code block styles */
.message .content pre {
    background: rgba(0,0,0,0.05);
    padding: 10px;
    border-radius: 5px;
    overflow-x: auto;
    white-space: pre-wrap;
}

.message .content code {
    font-family: 'Courier New', monospace;
    font-size: 0.9em;
}

/* Delete button in the history list */
.delete-btn {
    background: none;
    border: none;
    color: #e74c3c;
    padding: 2px 5px;
    cursor: pointer;
}

.delete-btn:hover {
    background: none;      /* override the blue button:hover background */
    color: #c0392b;
}
```

app.py:

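```python
import json
import subprocess
import time

import requests
from flask import Flask, render_template, request, jsonify, Response

app = Flask(__name__)
OLLAMA_HOST = "http://localhost:11434"  # Ollama's default address


def ollama_running():
    """Return True if the Ollama API already answers at OLLAMA_HOST."""
    try:
        requests.get(f"{OLLAMA_HOST}/api/tags", timeout=2)
        return True
    except requests.RequestException:
        return False


def start_ollama():
    # Skip if Ollama is already up (for example the desktop app, or the second
    # run caused by Flask's debug reloader); a second `ollama serve` cannot bind the port.
    if ollama_running():
        return
    try:
        # Try to start the Ollama service in the background
        subprocess.Popen(["ollama", "serve"],
                         stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        time.sleep(2)  # give the service a moment to start
    except Exception as e:
        print(f"Error starting Ollama: {e}")


@app.route('/')
def index():
    return render_template('index.html')  # served from templates/index.html


@app.route('/get_models')
def get_models():
    try:
        response = requests.get(f"{OLLAMA_HOST}/api/tags", timeout=5)
        response.raise_for_status()
        models = [model['name'] for model in response.json()['models']]
        return jsonify(models)
    except requests.RequestException:
        return jsonify({"error": "Cannot reach the Ollama service; make sure Ollama is installed and running"}), 500


@app.route('/chat', methods=['POST'])
def chat():
    data = request.json
    model = data['model']
    messages = data['messages']

    def generate():
        try:
            # Only the latest message is sent, so the model sees no earlier turns
            response = requests.post(
                f"{OLLAMA_HOST}/api/generate",
                json={
                    "model": model,
                    "prompt": messages[-1]['content'],
                    "stream": True
                },
                stream=True
            )
            # Ollama streams one JSON object per line; wrap each one as an SSE event
            for line in response.iter_lines():
                if line:
                    yield f"data: {line.decode('utf-8')}\n\n"
        except Exception as e:
            # Send errors as JSON too, so the browser's JSON.parse does not fail
            yield f"data: {json.dumps({'error': str(e)})}\n\n"

    return Response(generate(), mimetype='text/event-stream')


if __name__ == '__main__':
    start_ollama()
    app.run(debug=True, port=5000)
```

Runtime environment: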

- Python 3.7+

- Flask (pip install flask)

- requests (pip install requests)

- Ollama, with at least one model pulled
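A small, hypothetical pre-flight check for the requirements above. It assumes the import names `flask` and `requests`, and that the `ollama` executable should be on `PATH` because `app.py` launches `ollama serve` through `subprocess`. It only reports problems; it installs nothing and does not check whether a model has been pulled.

```python
import importlib.util
import shutil
import sys

# Import names of the third-party packages listed above
REQUIRED_PACKAGES = ["flask", "requests"]


def missing_packages(names):
    """Return the module names that cannot be found by this interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def check_environment():
    """Collect human-readable problems; an empty list means the basics are in place."""
    problems = []
    if sys.version_info < (3, 7):
        problems.append("Python 3.7+ is required, found " + sys.version.split()[0])
    for name in missing_packages(REQUIRED_PACKAGES):
        problems.append(f"missing package {name!r}: pip install {name}")
    # app.py starts the server with `ollama serve`, so the CLI must be on PATH
    if shutil.which("ollama") is None:
        problems.append("the 'ollama' executable was not found on PATH")
    return problems


if __name__ == "__main__":
    found = check_environment()
    for problem in found:
        print("-", problem)
    print("OK" if not found else f"{len(found)} problem(s) found")
```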

5. Run: `python app.py`
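`app.py` sleeps a fixed 2 seconds after launching `ollama serve`, which may be too short on a slow machine, in which case the first model list request could fail. One alternative, sketched here with the standard library only, is to poll `/api/tags` until the server answers or a deadline passes. `wait_for_ollama` is a hypothetical helper; in `app.py` it could stand in for the `time.sleep(2)` call.

```python
import http.client
import time
import urllib.request

OLLAMA_HOST = "http://localhost:11434"  # default address, as in app.py


def wait_for_ollama(host=OLLAMA_HOST, timeout=30.0, interval=0.5):
    """Poll GET {host}/api/tags until it returns HTTP 200 or `timeout` seconds pass.

    Returns True as soon as Ollama answers, False once the deadline is reached.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(f"{host}/api/tags", timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # Connection refused, timeouts and HTTP errors (URLError/HTTPError
            # derive from OSError): the server is not ready yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Polling costs a few cheap local requests but returns as soon as the server is ready, instead of guessing a delay.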

6. Open http://127.0.0.1:5000 and start chatting.
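The `/chat` endpoint streams lines of the form `data: {...}`, each wrapping one JSON object from Ollama's `/api/generate` stream, whose text sits in the `response` field. Network chunks do not line up with those lines, so a client has to buffer until it sees a newline; the same applies to the browser code. A sketch of such a parser in Python, assuming errors arrive as a JSON object with an `error` key (Ollama reports a missing model this way); `iter_sse_tokens` is a hypothetical name.

```python
import json
from typing import Iterable, Iterator


def iter_sse_tokens(chunks: Iterable[bytes]) -> Iterator[str]:
    """Yield the generated text from each `data: {...}` line of the /chat stream.

    A chunk can end in the middle of a line, or even inside a multi-byte UTF-8
    character, so raw bytes are buffered and only complete lines are decoded.
    """
    buffer = b""
    for chunk in chunks:
        buffer += chunk
        while b"\n" in buffer:
            line, buffer = buffer.split(b"\n", 1)
            line = line.strip()
            if not line.startswith(b"data: "):
                continue  # blank separator lines between events
            payload = json.loads(line[len(b"data: "):].decode("utf-8"))
            if "error" in payload:
                raise RuntimeError(payload["error"])
            # Each piece of text is in "response"; the final (done) object has ""
            yield payload.get("response", "")
```

To try it against the running app with `requests`, POST `{"model": ..., "messages": [{"content": ...}]}` to `http://127.0.0.1:5000/chat` with `stream=True` and pass `resp.iter_content(chunk_size=None)` to `iter_sse_tokens`.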

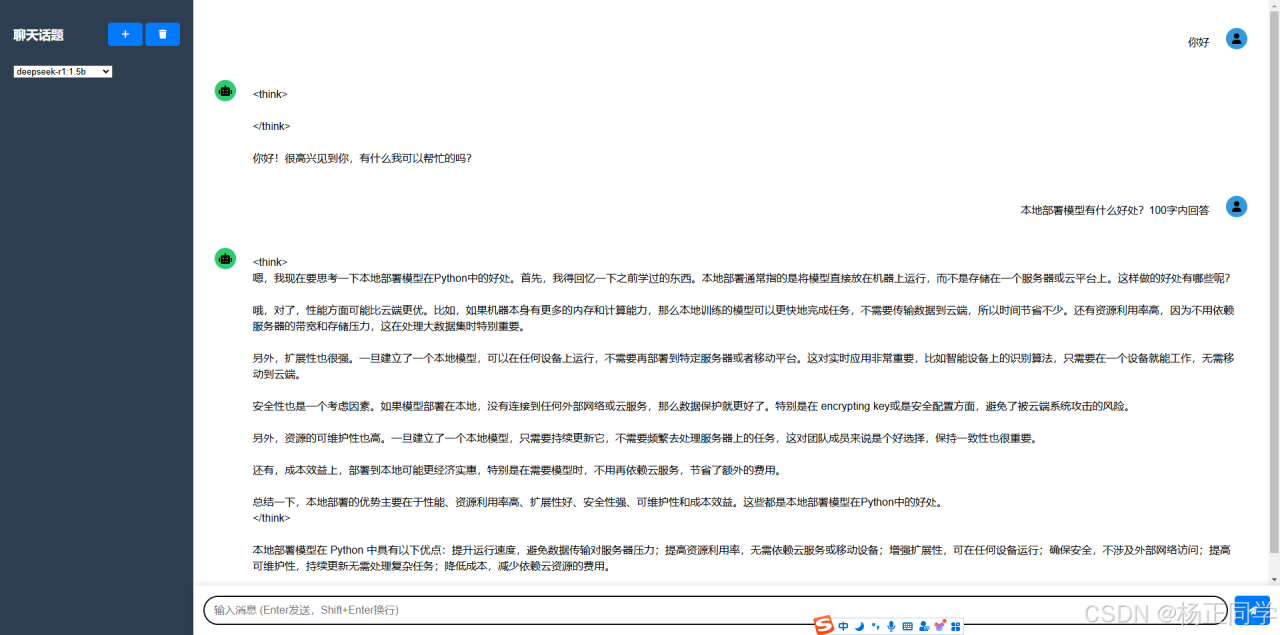

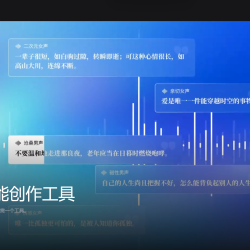

简单实现,界面如下,自己看着改:
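One obvious tweak: the sidebar keeps every turn of a conversation, but `/chat` forwards only the latest message to `/api/generate`, so the model never sees earlier turns. Ollama also provides `POST /api/chat`, which takes the whole conversation as a `messages` list of `{role, content}` objects. The sketch below (hypothetical helper names, standard library only) builds that payload from stored history and makes a non-streaming call; wiring it into `app.py` and the front end is left open.

```python
import json
import urllib.request

OLLAMA_HOST = "http://localhost:11434"  # default address, as in app.py


def build_chat_payload(model, history, user_message, stream=True):
    """Build a request body for Ollama's POST /api/chat.

    `history` is a list of {"role": "user" | "assistant", "content": str} dicts,
    the shape the front end keeps in localStorage. It is not modified.
    """
    messages = [{"role": m["role"], "content": m["content"]} for m in history]
    messages.append({"role": "user", "content": user_message})
    return {"model": model, "messages": messages, "stream": stream}


def chat_once(model, history, user_message, host=OLLAMA_HOST):
    """Send the whole conversation in one non-streaming request; return the reply text."""
    body = json.dumps(build_chat_payload(model, history, user_message, stream=False))
    request = urllib.request.Request(
        f"{host}/api/chat",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    # Generation can be slow on modest hardware, hence the generous timeout
    with urllib.request.urlopen(request, timeout=300) as resp:
        return json.load(resp)["message"]["content"]
```

Sending the full history makes each request longer as a conversation grows, which is the price of giving the model context.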

Original article: https://blog.csdn.net/icbyboy/article/details/145479035?ops_request_misc=%257B%2522request%255Fid%2522%253A%25223d5d3f36e6da425f51d398d858f14d15%2522%252C%2522scm%2522%253A%252220140713.130102334.pc%255Fblog.%2522%257D&request_id=3d5d3f36e6da425f51d398d858f14d15&biz_id=0&utm_medium=distribute.pc_search_result.none-task-blog-2~blog~first_rank_ecpm_v1~times_rank-8-145479035-null-null.nonecase&utm_term=deepseek%E4%BD%BF%E7%94%A8
