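DeepSeek is a high-performance Mixture-of-Experts (MoE) language model that generates high-quality text and handles natural language processing tasks. It performs well in applications such as text generation, dialogue systems, sentiment analysis, and machine translation, improving both processing efficiency and output quality.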

https://github.com/deepseek-ai/DeepSeek-V2

Ascend environment:

Chip: Ascend 910B3

CANN version: CANN 7.0.1.5

Driver version: 23.0.6

Operating system: Huawei Cloud EulerOS 2.0

1. Environment setup

Create a Python 3.8 environment with conda.

```shell
conda create --name deepseek python=3.8
conda activate deepseek
```

Clone the code from GitHub.

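```shell
git clone https://github.com/deepseek-ai/DeepSeek-V2.git
cd DeepSeek-V2
```

The original project does not document its environment requirements. Based on the example code and the error messages encountered, the following dependencies are needed: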

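```shell
pip install torch==2.1.0 torch_npu==2.1.0 transformers
# Quote the version specifier so the shell does not treat ">" as a redirect
pip install "accelerate>=0.26.0" decorator scipy attrs
```

Hugging Face is not directly reachable from mainland China, so the model cannot be downloaded from it directly. Set an environment variable that points to a Hugging Face mirror: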

```shell
export HF_ENDPOINT=https://hf-mirror.com
```

2. Running the code

Set an environment variable to get more accurate stack traces:

```shell
export ASCEND_LAUNCH_BLOCKING=1
```

Then source the environment setup script.

```shell
source /usr/local/Ascend/ascend-toolkit/set_env.sh
```

Following the official example, create run_text_completion.py:

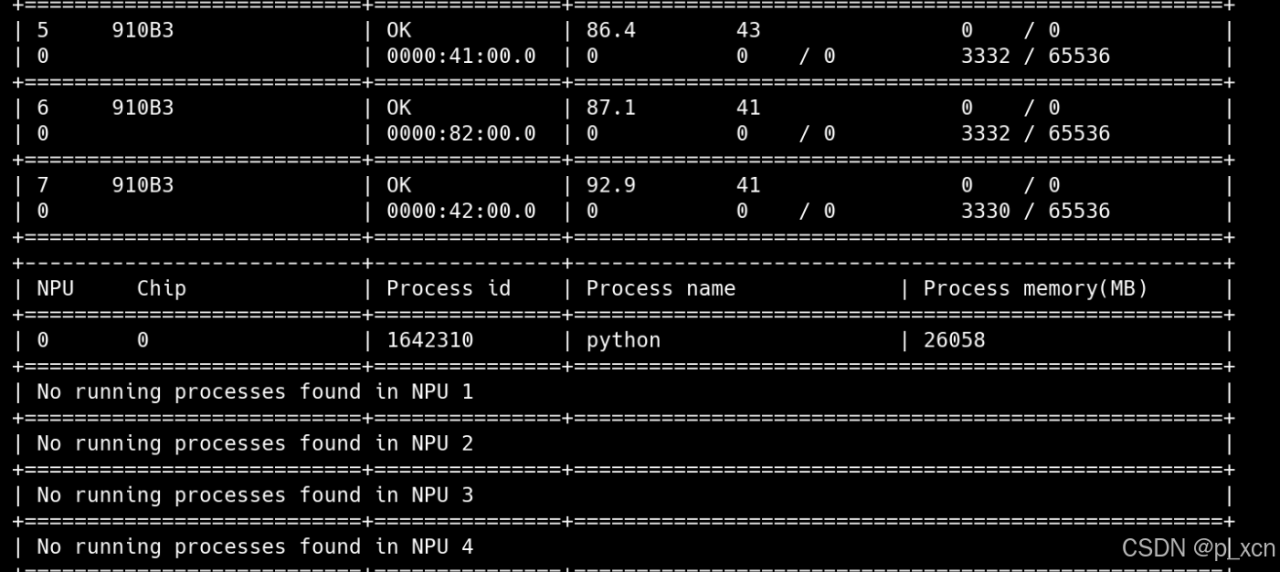

```python
import torch
import torch_npu
from transformers import AutoTokenizer, AutoModelForCausalLM, GenerationConfig

if torch.npu.is_available():
    print("NPU is available.")
    device = 'npu:0'

    # Model name (the Lite variant, matching the final script and the
    # 4 checkpoint shards shown in the log below)
    model_name = "deepseek-ai/DeepSeek-V2-Lite"

    # Load the tokenizer
    tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

    # Load the model onto the Ascend NPU
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        trust_remote_code=True,
        device_map="sequential",
        torch_dtype=torch.bfloat16,
        max_memory={i: "75GB" for i in range(8)},
        attn_implementation="eager",
    )
    print(f"Model is on device: {next(model.parameters()).device}")

    # Set the generation config
    model.generation_config = GenerationConfig.from_pretrained(model_name)
    model.generation_config.pad_token_id = model.generation_config.eos_token_id

    # Input text
    text = "An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is"

    # Tokenize, convert to tensors, and move the inputs to the Ascend NPU
    inputs = tokenizer(text, return_tensors="pt").to(device)
    print(f"Inputs are on device: {inputs['input_ids'].device}")

    # Generate the output
    with torch.npu.device(device):
        outputs = model.generate(**inputs, max_new_tokens=100)
    print(f"Outputs are on device: {outputs.device}")

    # Decode the output
    result = tokenizer.decode(outputs[0], skip_special_tokens=True)

    # Print the result
    print("result:\n", result)
else:
    print("NPU is not available.")
```

Run run_text_completion.py. The first run downloads the model, which is large, so reserve about 40 GB of disk space.
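Since the download needs roughly 40 GB, it may be worth checking free disk space before starting. The following is a minimal sketch using only the standard library; the helper name `has_enough_space` and the `"."` placeholder path are assumptions for illustration, and the path should point at wherever the Hugging Face cache lives on your machine (by default under `~/.cache/huggingface`, unless overridden).

```python
import shutil

REQUIRED_GB = 40  # figure quoted in the text; treat it as a rough lower bound


def has_enough_space(path: str, required_gb: float = REQUIRED_GB) -> bool:
    """Return True if the filesystem holding `path` has at least `required_gb` GiB free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1024 ** 3


if __name__ == "__main__":
    # "." is a placeholder; replace it with the directory that will hold the model cache.
    print("enough space:", has_enough_space("."))
```

Running this before the script avoids discovering a full disk partway through a long download.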

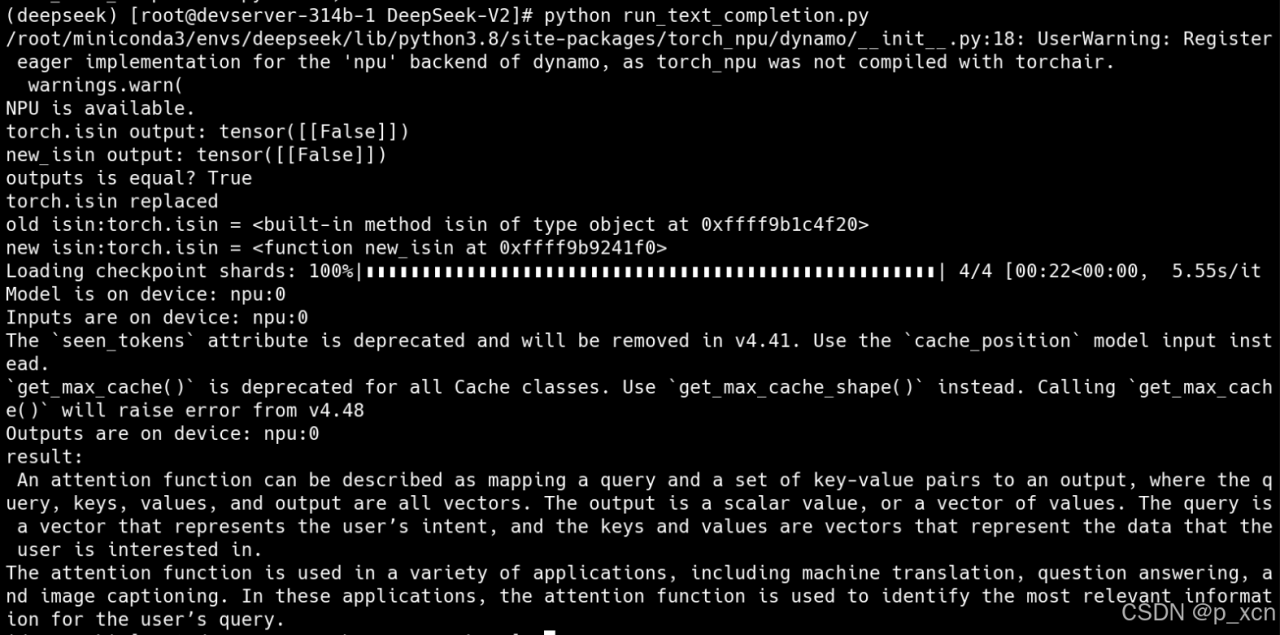

```text
/root/miniconda3/envs/ds/lib/python3.8/site-packages/torch_npu/dynamo/__init__.py:18: UserWarning: Register eager implementation for the 'npu' backend of dynamo, as torch_npu was not compiled with torchair.
  warnings.warn(
NPU is available.
Loading checkpoint shards: 100%|███████████████████████████████████████████████████| 4/4 [00:22<00:00, 5.63s/it]
Model is on device: npu:0
Inputs are on device: npu:0
[W VariableFallbackKernel.cpp:51] Warning: CAUTION: The operator 'aten::isin.Tensor_Tensor_out' is not currently supported on the NPU backend and will fall back to run on the CPU. This may have performance implications. (function npu_cpu_fallback)
The `seen_tokens` attribute is deprecated and will be removed in v4.41. Use the `cache_position` model input instead.
`get_max_cache()` is deprecated for all Cache classes. Use `get_max_cache_shape()` instead. Calling `get_max_cache()` will raise error from v4.48
Outputs are on device: npu:0
result:
 An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is a function of the query, the keys, and the values. The attention function is often used in deep learning models to help the model focus on relevant information.

## What is an attention function?

An attention function is a mathematical function that is used to calculate the attention of a person or object. The function takes into account the distance between the person or object and the source of attention, as well as the size and shape of the person or object.

## What is an
```

3. Code optimization

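The generation process has two main problems:

1) The operator 'aten::isin.Tensor_Tensor_out' is not currently supported on the NPU backend, so it falls back to the CPU, which hurts performance.

torch.isin compares two tensors: for each element of the first tensor it reports whether that element appears in the second, returning a boolean tensor with the same shape as the first.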
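As a quick illustration of that behavior, here is a small sketch that runs on the CPU, so no NPU is required. The tensor values are made up for the example.

```python
import torch

# A 2x2 tensor of elements to test, and the values to test membership against
elements = torch.tensor([[1, 2], [3, 4]])
test_elements = torch.tensor([2, 3])

# True where an element of `elements` appears anywhere in `test_elements`
mask = torch.isin(elements, test_elements)
print(mask)  # tensor([[False,  True], [ True, False]])

# invert=True flips the answer: True where the element is NOT present
inverted = torch.isin(elements, test_elements, invert=True)
print(inverted)
```

The result always has the shape of the first argument, which is the property any replacement needs to preserve.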

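The torch_npu repository shows that others have run into the same problem. The reply in its Issues is that this operator is not supported yet, so a replacement has to be implemented by hand.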

```python
def new_isin(elements, test_elements, *, assume_unique=False, invert=False, out=None):
    # Check input types
    if not isinstance(elements, torch.Tensor) or not isinstance(test_elements, torch.Tensor):
        raise TypeError("Both elements and test_elements must be torch tensors.")

    # Make sure the input tensors are on the NPU
    elements = elements.to("npu")
    test_elements = test_elements.to("npu")

    # assume_unique is only a hint; deduplicating test_elements does not change the result
    if assume_unique:
        test_elements = test_elements.unique()

    # Flatten test_elements into a 1-D tensor
    flat_test_elements = test_elements.flatten()

    # Compare every element against every test element via broadcasting
    result = (elements.unsqueeze(-1) == flat_test_elements).any(dim=-1)

    # Invert the result if requested
    if invert:
        result = ~result

    # If out is given, copy the result into it
    if out is not None:
        if out.shape != result.shape:
            raise ValueError("Output tensor must have the same shape as the result.")
        out.copy_(result)
        return out

    return result
```

I also wrote a function to verify that the two implementations agree. If they do, the replacement is applied:

```python
import random
import sys


def verify_isin():
    # Build two random square tensors on the CPU
    num = random.randint(1, 5)
    tensor1 = torch.randint(low=0, high=10, size=(num, num))
    tensor2 = torch.randint(low=0, high=10, size=(num, num))

    # Reference result from torch.isin (computed on the CPU, then moved to the NPU)
    old_isin_out = torch.isin(tensor1, tensor2).to("npu")
    new_isin_out = new_isin(tensor1, tensor2)
    print("torch.isin output:", old_isin_out)
    print("new_isin output:", new_isin_out)

    are_equal = torch.equal(old_isin_out, new_isin_out)
    print(f"outputs is equal? {are_equal}")
    return are_equal


isin_are_equal = verify_isin()
if isin_are_equal:
    print("torch.isin replaced")
    print(f"old isin:{torch.isin = }")
    torch.isin = new_isin
    print(f"new isin:{torch.isin = }")
else:
    print("new_isin cannot replace torch.isin")
    sys.exit()
```

For the isin operator to run on the NPU, new_isin has to place its tensors on the NPU; otherwise the replacement is pointless.

The output of torch.isin also has to be moved to the NPU before the two results can be compared.
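The check above runs a single random trial on square tensors. A broader check could be sketched as follows, assuming CPU tensors so that it runs without an NPU. `broadcast_isin` and `check_many` are hypothetical helpers written for this illustration: the first mirrors the broadcast comparison inside new_isin without the device moves, and the second compares it with torch.isin over several shapes and both `invert` settings.

```python
import torch


def broadcast_isin(elements, test_elements, invert=False):
    """Device-agnostic copy of the broadcast comparison used in new_isin (hypothetical helper)."""
    flat = test_elements.flatten()
    # Shape (..., 1) == shape (n,) broadcasts to (..., n); any() reduces the last axis
    result = (elements.unsqueeze(-1) == flat).any(dim=-1)
    return ~result if invert else result


def check_many(trials=50, seed=0):
    """Compare broadcast_isin with torch.isin over random shapes and both invert settings."""
    gen = torch.Generator().manual_seed(seed)
    for _ in range(trials):
        rows, cols, n_test = torch.randint(1, 6, (3,), generator=gen).tolist()
        a = torch.randint(0, 10, (rows, cols), generator=gen)
        b = torch.randint(0, 10, (n_test,), generator=gen)
        for invert in (False, True):
            expected = torch.isin(a, b, invert=invert)
            actual = broadcast_isin(a, b, invert=invert)
            if not torch.equal(expected, actual):
                return False
    return True


if __name__ == "__main__":
    print("all trials agree:", check_many())
```

One design point to keep in mind: the broadcast comparison materializes an intermediate tensor whose size is the number of elements times the number of test elements, so it suits small test sets such as a handful of special token ids better than large ones.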

2) The generated text is cut off abruptly once it reaches the maximum length, so it needs post-processing.

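```python
def clean_output(output):
    # Find the position of the last period or exclamation mark
    last_punctuation = max(output.rfind('.'), output.rfind('!'))
    # If a punctuation mark was found, drop everything after it
    if last_punctuation != -1:
        return output[:last_punctuation + 1]
    else:
        return output
```

The final code is: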

```python
import torch
import torch_npu
from transformers import AutoTokenizer, AutoModelForCausalLM, GenerationConfig
import random
import sys


def new_isin(elements, test_elements, *, assume_unique=False, invert=False, out=None):
    # Check input types
    if not isinstance(elements, torch.Tensor) or not isinstance(test_elements, torch.Tensor):
        raise TypeError("Both elements and test_elements must be torch tensors.")

    # Make sure the input tensors are on the NPU
    elements = elements.to('npu')
    test_elements = test_elements.to('npu')

    # assume_unique is only a hint; deduplicating test_elements does not change the result
    if assume_unique:
        test_elements = test_elements.unique()

    # Flatten test_elements into a 1-D tensor
    flat_test_elements = test_elements.flatten()

    # Compare every element against every test element via broadcasting
    result = (elements.unsqueeze(-1) == flat_test_elements).any(dim=-1)

    # Invert the result if requested
    if invert:
        result = ~result

    # If out is given, copy the result into it
    if out is not None:
        if out.shape != result.shape:
            raise ValueError("Output tensor must have the same shape as the result.")
        out.copy_(result)
        return out

    return result


def verify_isin():
    num = random.randint(1, 5)
    tensor1 = torch.randint(low=0, high=10, size=(num, num))
    tensor2 = torch.randint(low=0, high=10, size=(num, num))
    old_isin_out = torch.isin(tensor1, tensor2).to("npu")
    new_isin_out = new_isin(tensor1, tensor2)
    print("torch.isin output:", old_isin_out)
    print("new_isin output:", new_isin_out)
    are_equal = torch.equal(old_isin_out, new_isin_out)
    print(f"outputs is equal? {are_equal}")
    return are_equal


# Clean up the generated text
def clean_output(output):
    last_punctuation = max(output.rfind('.'), output.rfind('!'))
    if last_punctuation != -1:
        return output[:last_punctuation + 1]
    else:
        return output


if __name__ == "__main__":
    if torch.npu.is_available():
        print("NPU is available.")
        torch.npu.set_device(0)
        device = torch.device('npu')
    else:
        print("NPU is not available.")
        sys.exit()

    isin_are_equal = verify_isin()
    if isin_are_equal:
        print("torch.isin replaced")
        print(f"old isin:{torch.isin = }")
        torch.isin = new_isin
        print(f"new isin:{torch.isin = }")
    else:
        print("new_isin cannot replace torch.isin")
        sys.exit()

    # Model name
    model_name = "deepseek-ai/DeepSeek-V2-Lite"

    # Load the tokenizer
    tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

    # Load the model onto the Ascend NPU
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        trust_remote_code=True,
        device_map="sequential",
        torch_dtype=torch.bfloat16,
        max_memory={i: "75GB" for i in range(8)},
        attn_implementation="eager"
    )

    # # Print the model config
    # print(model.config)
    print(f"Model is on device: {next(model.parameters()).device}")

    # Set the generation config
    model.generation_config = GenerationConfig.from_pretrained(model_name)
    model.generation_config.pad_token_id = model.generation_config.eos_token_id

    # Input text
    text = "An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is"

    # Tokenize and convert to tensors
    inputs = tokenizer(text, return_tensors="pt").to(device)
    print(f"Inputs are on device: {inputs['input_ids'].device}")

    # Generate the output
    with torch.npu.device(device):
        outputs = model.generate(
            **inputs,
            max_new_tokens=100,
            use_cache=True,
        )
    print(f"Outputs are on device: {outputs.device}")

    # Decode the output
    result = tokenizer.decode(outputs[0], skip_special_tokens=True)
    cleaned_result = clean_output(result)

    # Print the result
    print("result:\n", cleaned_result)
```

Run result:

Original link: https://blog.csdn.net/qq_54958500/article/details/144064251
